Data-driven Analysis of Hate Speech on German Twitter and the Effects of Regulation

Social media have become one of the primary information channels for many individuals, a trend that intensified during the COVID-19 pandemic. In Germany, the percentage of individuals who use social media as a source of information increased steadily from 2013 to 2020, reaching almost 40% in April 2020. As such, social media are a prime example of the ambivalence of digitization: On the one hand, they provide new opportunities for social interaction and political participation. On the other hand, they facilitate the dissemination of extremist thought and aggressive or harassing content. In this context, the widely known term “hate speech” describes aggressive and derogatory statements directed at people who are assigned to certain groups.

According to a representative survey run by Forsa in Germany in 2019, more than 70% of the respondents reported having encountered online hate speech, and this share has likely risen since then. Encountering online hatred has negative consequences not only for the individuals themselves (e.g. emotional stress, fear and reputational damage), but also for society as a whole: it has been shown that online hate speech translates into further negative real-world outcomes such as societal segregation and xenophobic attacks.

In order to reduce the dissemination of online hate speech, the German government implemented the Network Enforcement Act (NetzDG) in October 2017. This law obliges social platforms with more than 2 million users in Germany to delete posts and comments containing clearly hateful and insulting content within 24 hours after they have been reported.

While Germany was the first country to implement a law regulating user-generated content (UGC), other countries and the European Commission have since started to regulate UGC as well, mostly with similar approaches. Yet, to the best of our knowledge, no empirical investigation has evaluated the effectiveness of such regulation. This project aims to close this gap by answering the following research question:

Is the Network Enforcement Act effective in lowering the prevalence of hateful content on social networks in Germany?

Project Plan

To address this research question, we will conduct empirical studies on one of the world's largest social networks, Twitter. A data-based understanding of the prevalence of online hate speech and the impact of the NetzDG is crucial for establishing guidelines for successful future regulation of UGC in the EU and other countries. This need is underscored by the German government's evaluation report on the NetzDG, published in September 2020, which notes that, due to the lack of data, an evaluation of the quality of the platforms’ decisions to remove or keep content is not yet possible. Since the topic of online hate speech is also prevalent in public discourse, we expect the results of this project to attract considerable interest from policy makers, the scientific community, and the media.

In preliminary difference-in-differences analyses, we compared the prevalence of hateful content in migration-related tweets in the German and Austrian Twittersphere before and after the introduction of the law in Germany. This comparison allows us to isolate the causal effect of the law on a variety of measures related to hateful speech, obtained from pre-trained algorithms and specialized dictionaries for the German language. Our results suggest a significant decrease in the number of severely toxic tweets and tweets containing identity attacks. The sentiment of the tweets, however, does not seem to be affected by the NetzDG.

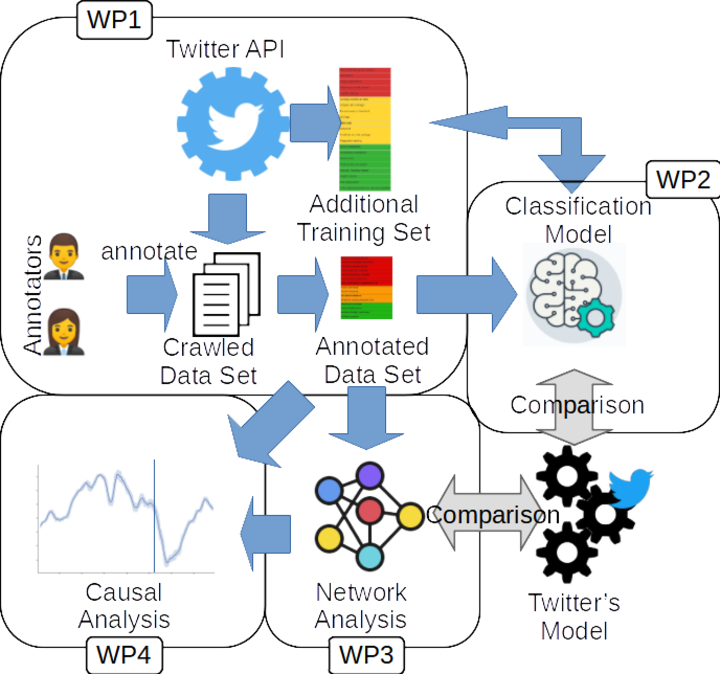
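To make the difference-in-differences logic described above concrete, the following is a minimal sketch in Python. It is not the project's actual econometric specification: the function name `did_estimate`, the tuple layout, and all numbers in `toy` are hypothetical and invented purely for illustration. It assumes Germany as the treated group and Austria as the control group, as in the comparison described above, and computes the simple two-by-two estimate: the change in the mean outcome in the treated group minus the change in the control group.

```python
from statistics import mean


def did_estimate(rows):
    """Return the 2x2 difference-in-differences estimate.

    rows: iterable of (treated, post, outcome) tuples, where
      treated -- True for the treated group (here: German tweets),
      post    -- True for observations after the law took effect,
      outcome -- a numeric hate-speech measure (e.g. a toxicity score).
    """
    cells = {}
    for treated, post, outcome in rows:
        cells.setdefault((treated, post), []).append(outcome)
    m = {key: mean(values) for key, values in cells.items()}

    change_treated = m[(True, True)] - m[(True, False)]
    change_control = m[(False, True)] - m[(False, False)]
    # The control group's change approximates what would have happened
    # to the treated group without the law (parallel-trends assumption).
    return change_treated - change_control


# Invented toy scores, NOT project data.
toy = [
    (True, False, 0.30), (True, False, 0.34),    # Germany, before
    (True, True, 0.22), (True, True, 0.26),      # Germany, after
    (False, False, 0.28), (False, False, 0.32),  # Austria, before
    (False, True, 0.27), (False, True, 0.31),    # Austria, after
]

effect = did_estimate(toy)
print(f"Estimated effect: {effect:+.3f}")
```

A negative value indicates that the outcome fell more in the treated group than in the control group. In practice, such an estimate would typically be obtained from a regression with group, period, and interaction terms so that standard errors and control variables can be included.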

In this project, we aim to validate the results described above by running different econometric specifications. Furthermore, we plan to extend the analysis with a more sophisticated and comprehensive measure of online hate speech. To that end, we want to develop a tool for automated hate speech classification, which could be adapted to other settings and languages to allow a broad application of the project outcomes. In addition, we plan to conduct a deeper analysis of the mechanisms through which the law could affect the spread of online hate, in order to derive policy recommendations.

Since the research proposal was written, there have been a few developments that may slightly change the focus of the project. With the military conflict in Ukraine, social media platforms faced the challenge of introducing rules for addressing hate speech in the context of an ongoing war. Twitter responded to this challenge by introducing a new policy. Further, after Twitter was bought by Elon Musk, there have been radical changes in the company, which may also affect its handling of hate speech. Finally, when looking at topics which may involve hate speech, we will also consider recently emerging topics with a high potential for polarization, such as the above-mentioned war in Ukraine and climate activism.

Project Team

- Raphaela Andres, ZEW and Telecom Paris

- Katharina Ludwig, University of Mannheim

- Heiko Paulheim, University of Mannheim

- Olga Slivko, Erasmus University Rotterdam

Project Funding

The project is funded by the German Foundation for Peace Research (DSF). For further information, see also the project web page at the DSF.

Project Results

The following talks have been given about the project:

- Content Regulation and Content Production on Social Media: Evidence from NetzDG. Presented at Digital Economy Workshop by Olga Slivko.

- Analyzing Prorussian Narratives on X. Presented at ZEW Early Ideas seminar by Raphaela Andres.

Furthermore, the project has been introduced in the following outlets:

- Helen Whittle: X: How Elon Musk changed Twitter in one year. Deutsche Welle

- Podcast Tonspur Wissen: Hate Speech. Leibniz Gesellschaft

- Heiko Paulheim: Responsible News Recommender Systems, talk at Responsible AI Symposium, Baden Württemberg Stiftung