WebMall Benchmark for Evaluating LLM-Agents Released

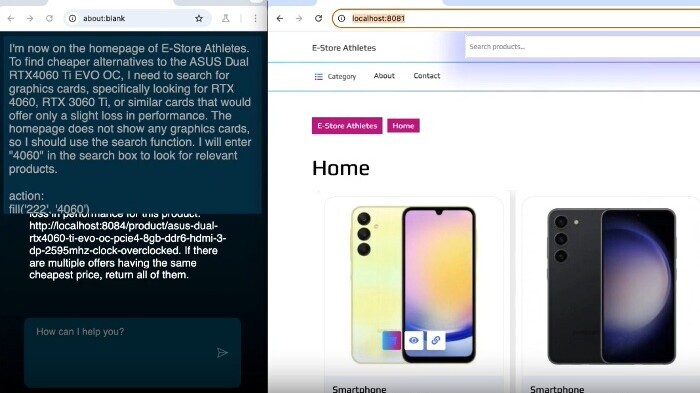

The benchmark features two sets of tasks. The basic task set involves common e-commerce actions such as searching for and comparing product offers, adding items to the shopping cart, and completing the checkout process. The advanced task set includes more complex challenges such as handling vague user requirements, finding compatible products, or identifying cheaper substitutes.

For each task, the agent is required to visit four e-shops that present heterogeneous product offers through diverse user interfaces. WebMall differs from existing e-commerce benchmarks, such as WebShop, WebArena, or Mind2Web, by 1) requiring the agent to visit multiple e-shops, 2) featuring product offers from different real-world sources, and 3) containing advanced tasks such as finding compatible or substitute products.

Initial experiments with agents based on GPT-4.1 and Claude Sonnet 4, operating on accessibility-tree (AX-tree) representations of webpages, show that the agents achieve success rates of 62% to 75% on the basic task set but succeed in only 19% to 41% of the advanced tasks.

More information about the benchmark is available at https://wbsg-uni-mannheim.github.io/WebMall/