Solutions for Automatic Improvement and Enrichment of Knowledge Graphs (LAVA)

How can artificial intelligence (AI) be used to make decisions that people can comprehend? This is the subject of a new project at the DWS group, funded by the Federal Ministry of Education and Research (BMBF).

Knowledge graphs influence our lives every day, yet they are little known to the public. For example, when you ask a voice assistant for the weather forecast or search for movie recommendations on a streaming platform, a knowledge graph is often working in the background to generate the response. Knowledge graphs are a key building block of AI: broadly speaking, they are models that represent entities and the relationships between them, which makes it possible to search for and link information.

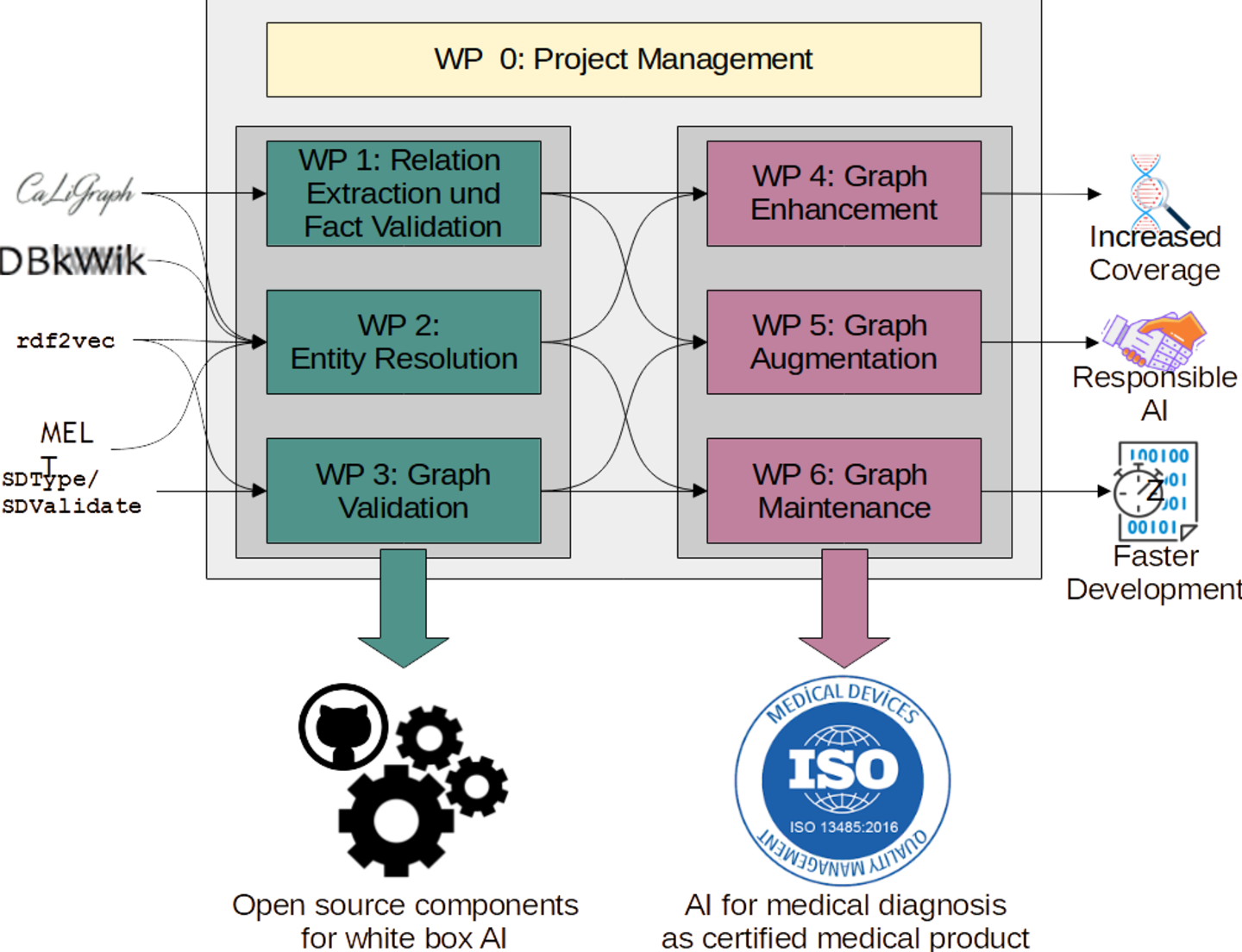

The LAVA research project, led by Prof. Dr. Heiko Paulheim, aims to automatically create and improve the knowledge graphs on which AI systems are built. The project is conducted in collaboration with the Karlsruhe-based company medicalvalues GmbH, with which the group has been cooperating since 2023 on a project for the AI-based detection of diabetes. The company specializes in AI solutions for medical diagnostics in laboratories and clinics. Unlike with film recommendations, it is essential in this field that the AI is reliable and trustworthy.

The goal of LAVA is a certified medical product that will help doctors make quick and precise diagnoses in the future. In the case of a rare disease, for example, data such as X-ray images, blood values, and other relevant measurements are to be combined and linked in a knowledge graph, making it easier for the treating physician to decide on the next steps. Heiko Paulheim's team is contributing software modules for the semi-automated maintenance of knowledge graphs, which help keep this knowledge graph up to date and free of errors at all times.

In addition, the project is to deliver reusable, well-documented components for white-box AI. White-box AI refers to models that make transparent how decisions are reached, for example by relying on knowledge graphs that humans can also understand. Users can therefore see which data a decision is based on. This is not the case with black-box models such as ChatGPT, where it is not possible to trace how an answer came about.